Serverless T-Pot Honeynets on AWS

Serverless honeynet powered by T-Pot, AWS Fargate & CDK

Ant Burke, February 2021

ant.b@camulos.io

Introduction

This is a short article about some rapid prototyping from a recent maker session here at Camulos. Honeynets and honeypots are technologies that mimic real-world servers, systems and services to lure attacks from hackers. This lets cyber defenders observe what threat actors do and produce new cyber threat intelligence (CTI). Check out the awesome Honeynet Project (https://www.honeynet.org/) for lots of resources in this area.

T-Pot is a fantastic open source project creating usable and powerful honeynet tech. If you don't know about T-Pot, check out the top-notch project here: https://github.com/telekom-security/tpotce

When I first came across T-Pot about two years ago, I was impressed. I wanted to give it a go, simply to check out the features and get a glimpse of the data generated by the system, though not quite enough to set up and run an instance on my home kit. At the time, I wrote a bunch of AWS CLI scripts to deploy the T-Pot VM ISO to an EC2 instance and configure the necessary AWS permissions (security groups, etc.), then ran it for a few hours watching the threat alerts bleeping on the cyber-style Kibana dashboard.

With that done, I was able to explore and tinker and get that warm fuzzy feeling of learning something new... before tearing it down to save on my EC2 bill. Running T-Pot on an EC2 instance was the quickest way I could think of to experiment with this cool new project in AWS.

When I revisited T-Pot at a recent maker day session, it was clear that the T-Pot community had put a lot of effort into cool new features. One area that stood out was a set of automated cloud deployments, including AWS and Open Telekom Cloud. I scanned over their Terraform for AWS and noticed it was (basically) doing the same thing as my AWS CLI scripts from times gone by: deploying the T-Pot ISO to an EC2 instance and setting up security groups correctly to allow the right access.

A lot has happened in AWS land since I was scripting EC2s, and two developments stand out - AWS Cloud Development Kit (CDK) and Fargate:

- AWS Cloud Development Kit (CDK) became generally available in 2019, giving devs the power of infrastructure as code without hand-writing volumes of CloudFormation JSON/YAML or Terraform configuration. Define your AWS stack in Python/Java/TypeScript and watch it come to life with a simple 'cdk deploy' command. Neat.

- AWS Fargate offers serverless container runtimes, which means deploying and running containers without having to manage an EC2 estate, saving effort on setup and sustainment. Fargate recently gained the ability to use Elastic File System (EFS) for persistent container storage, perfect for replicating T-Pot logging and the Elastic stack.
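For readers new to Fargate volumes, here is a minimal sketch of how an EFS mount is declared, written as a plain Python dictionary in the shape accepted by the ECS `RegisterTaskDefinition` API (`efsVolumeConfiguration` and `mountPoints` are fields of that API). The task family, image name, file system ID and container path are hypothetical placeholders rather than values from this deployment; a CDK stack synthesises an equivalent definition for you.

```python
import json


def fargate_task_with_efs(family, image, file_system_id, container_path):
    """Return a Fargate task definition that mounts one EFS volume into one container.

    Every argument is a caller-supplied placeholder; nothing here calls AWS.
    """
    volume_name = "honeypot-data"  # hypothetical volume name, local to this task
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks always use awsvpc networking
        "cpu": "256",             # task-level CPU/memory are required on Fargate
        "memory": "512",
        # Task-level volume backed by EFS, so data outlives any single task.
        "volumes": [
            {
                "name": volume_name,
                "efsVolumeConfiguration": {
                    "fileSystemId": file_system_id,
                    "transitEncryption": "ENABLED",
                },
            }
        ],
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                # Mount the task-level volume where the honeypot writes its logs.
                "mountPoints": [
                    {
                        "sourceVolume": volume_name,
                        "containerPath": container_path,
                        "readOnly": False,
                    }
                ],
            }
        ],
    }


if __name__ == "__main__":
    task = fargate_task_with_efs(
        family="cowrie",
        image="example/cowrie:latest",  # hypothetical image reference
        file_system_id="fs-12345678",   # hypothetical EFS file system ID
        container_path="/data/cowrie/log",
    )
    print(json.dumps(task, indent=2))
```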

Why do this?

There are lots of reasons why I thought this was a nice idea for some rapid prototyping. Here are the main ones:

- Exploring new tech that could support advances in automated cyber defences is always worthwhile.

- Scattering honeypots across IP space. Running each containerised honeypot on its own public IP address, and being able to spin them up and tear them down with ease, might keep scanners busier than multiple unrelated honeypots sharing a single IP address.

- Open up interesting analysis themes. Running T-Pot creates data that I'd like to explore in the context of how honeynet target information spreads.

- Keeping skills sharp. Hands-on work with new tech and new features helps us stay current in one of our primary areas.

- Enjoyment and curiosity. This really is our cup of tea!

What did we do?

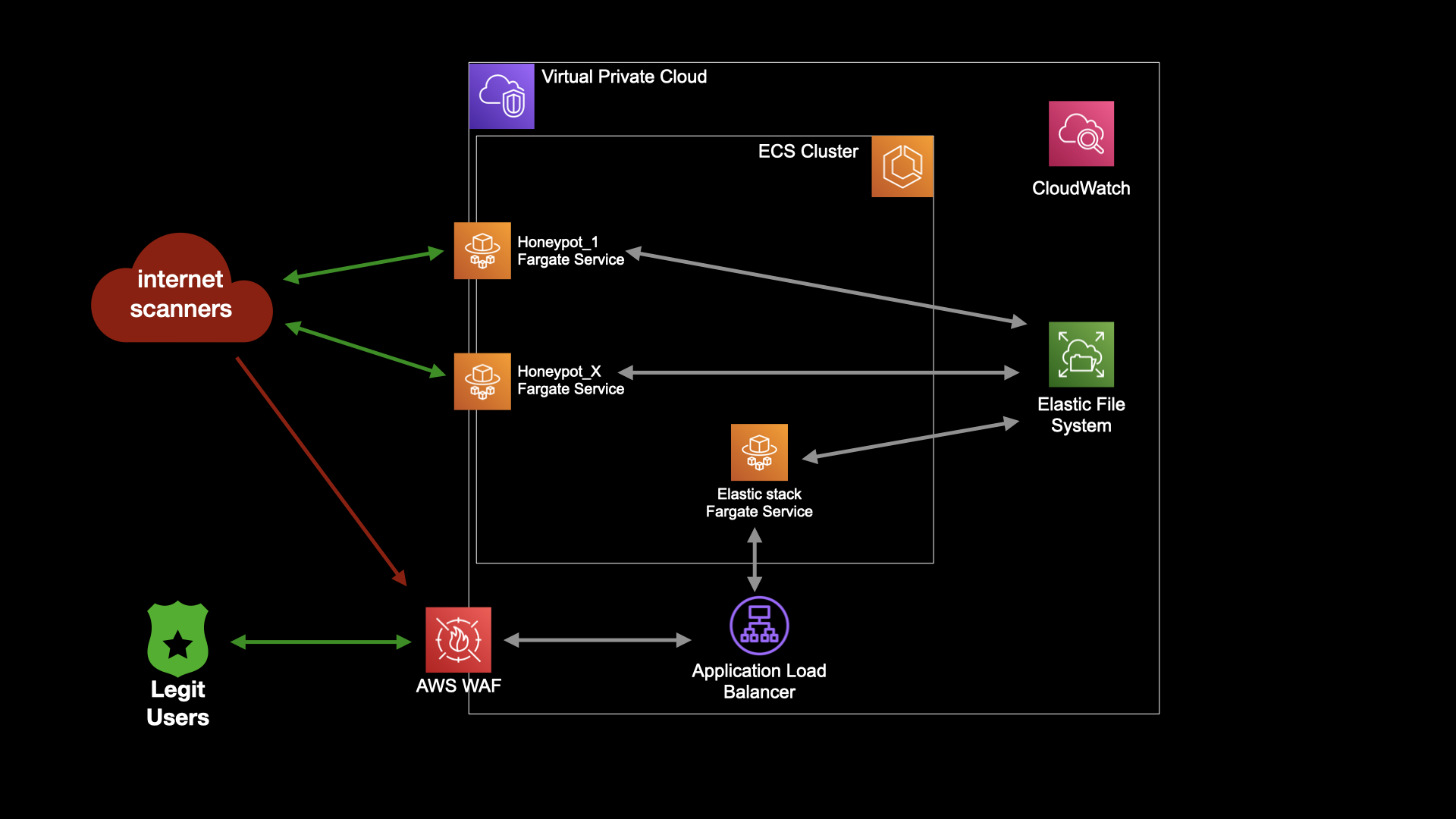

I managed to create a functioning deployment running each honeypot as a distinct, running the Elastic stack as a single service and using ALB to provide basic security to our Kibana dashboard.Here is a picture of what was built:

Simple architecture view of serverless T-Pot deployment, created using AWS CDK

Reflections

It is always worthwhile looking back at what you've done and considering the good and bad. Here are my reflections on a very interesting bit of tech dev:

- EFS + ECS + CDK was not easy. The very new Fargate EFS support allows honeypot images to be reused as ECS services with minimal reconfiguration. However, I didn't find it as easy as simply following a tutorial and hitting 'cdk deploy'. It took lots of trial and error with IAM, security groups, access points, POSIX users and file system permissions to get things working. I ended up using one EFS access point per 'host' folder, with the POSIX user set to 0:0 (uid:gid).
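The one-access-point-per-folder approach can be sketched as a generator of CloudFormation `AWS::EFS::AccessPoint` resources, using that resource type's `PosixUser` and `RootDirectory` properties. The folder list, the logical ID naming scheme and the `755` directory mode are assumptions for illustration; only the 0:0 uid:gid comes from the point above.

```python
import json
from typing import Dict, List

# Hypothetical T-Pot style 'host' folders; the real list depends on which honeypots you run.
HOST_FOLDERS = ["/data/cowrie/log", "/data/dionaea/log", "/data/elk/data"]


def access_point_resources(fs_logical_id: str, folders: List[str],
                           uid: str = "0", gid: str = "0",
                           permissions: str = "755") -> Dict[str, dict]:
    """Return one AWS::EFS::AccessPoint resource per folder, keyed by logical ID."""
    resources = {}
    for folder in folders:
        # e.g. "/data/cowrie/log" -> "DataCowrieLogAccessPoint"
        logical_id = "".join(p.capitalize() for p in folder.strip("/").split("/"))
        logical_id += "AccessPoint"
        resources[logical_id] = {
            "Type": "AWS::EFS::AccessPoint",
            "Properties": {
                # Ref on an AWS::EFS::FileSystem resolves to its file system ID.
                "FileSystemId": {"Ref": fs_logical_id},
                # All NFS operations through this access point run as uid:gid.
                "PosixUser": {"Uid": uid, "Gid": gid},
                "RootDirectory": {
                    # Clients mounting via this access point see this folder as "/".
                    "Path": folder,
                    # If the folder is missing, EFS creates it with this owner and mode.
                    "CreationInfo": {
                        "OwnerUid": uid,
                        "OwnerGid": gid,
                        "Permissions": permissions,
                    },
                },
            },
        }
    return resources


if __name__ == "__main__":
    print(json.dumps(access_point_resources("TpotFileSystem", HOST_FOLDERS), indent=2))
```

Each task's EFS volume would then point at its own access point via `authorizationConfig.accessPointId`.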

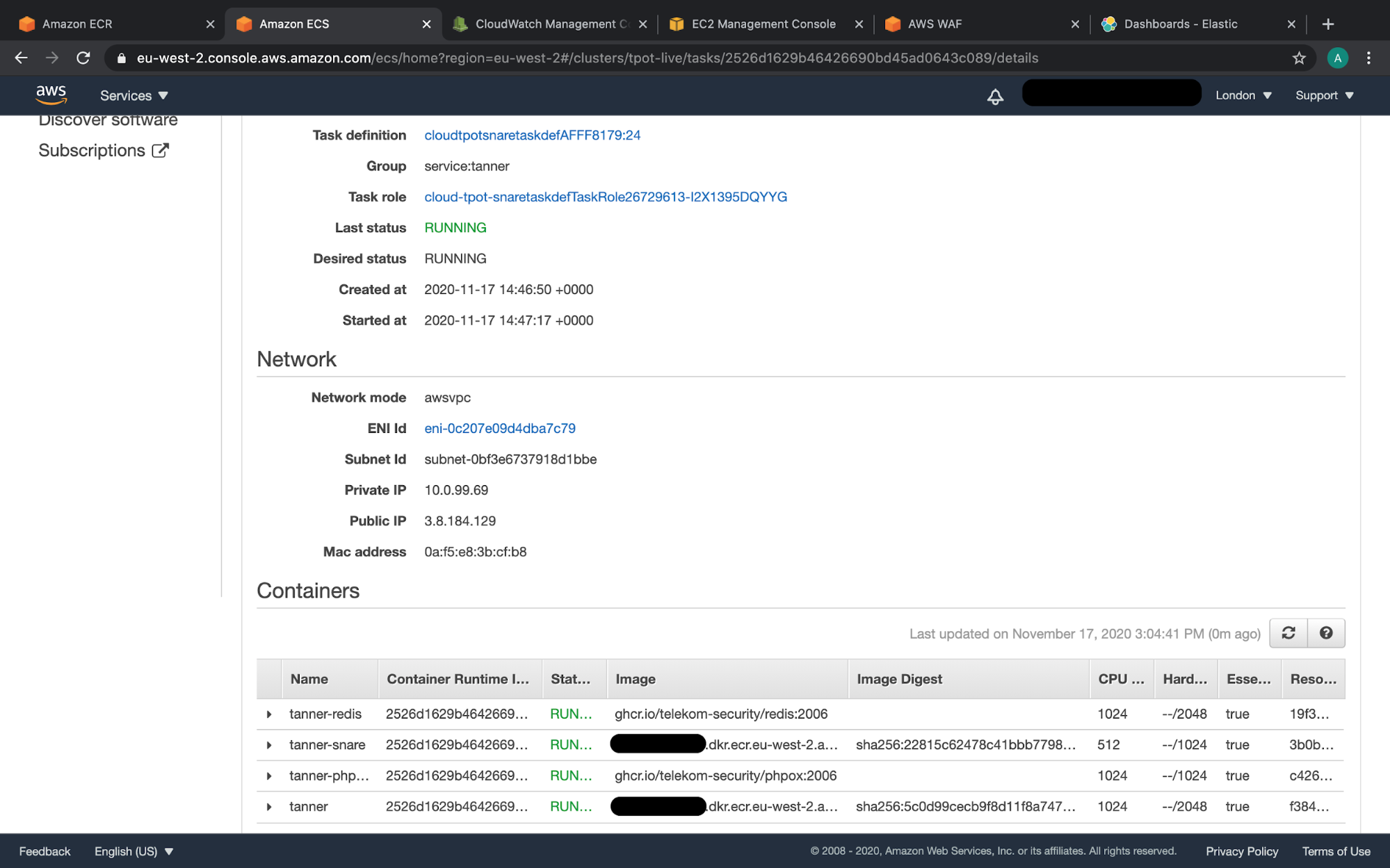

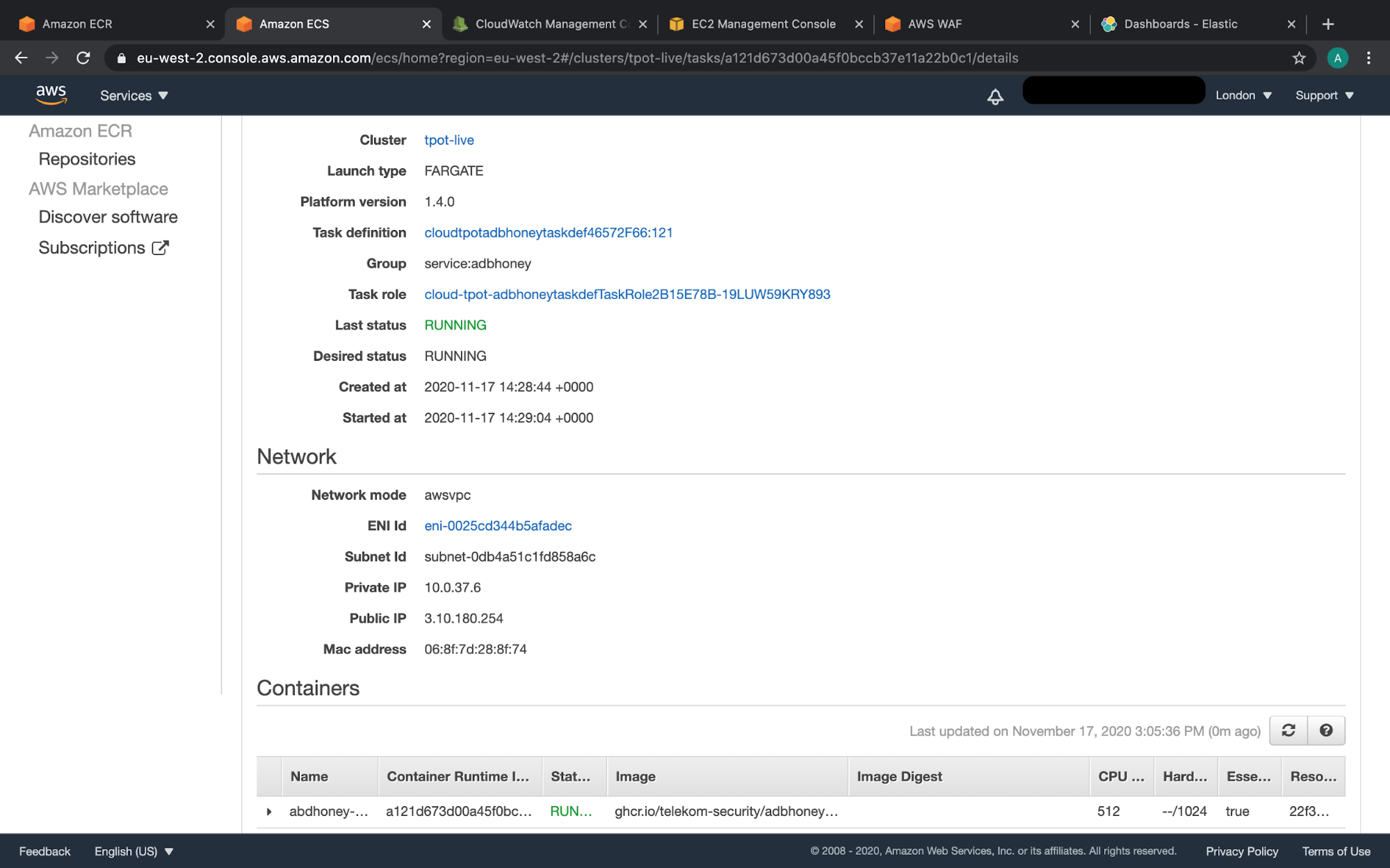

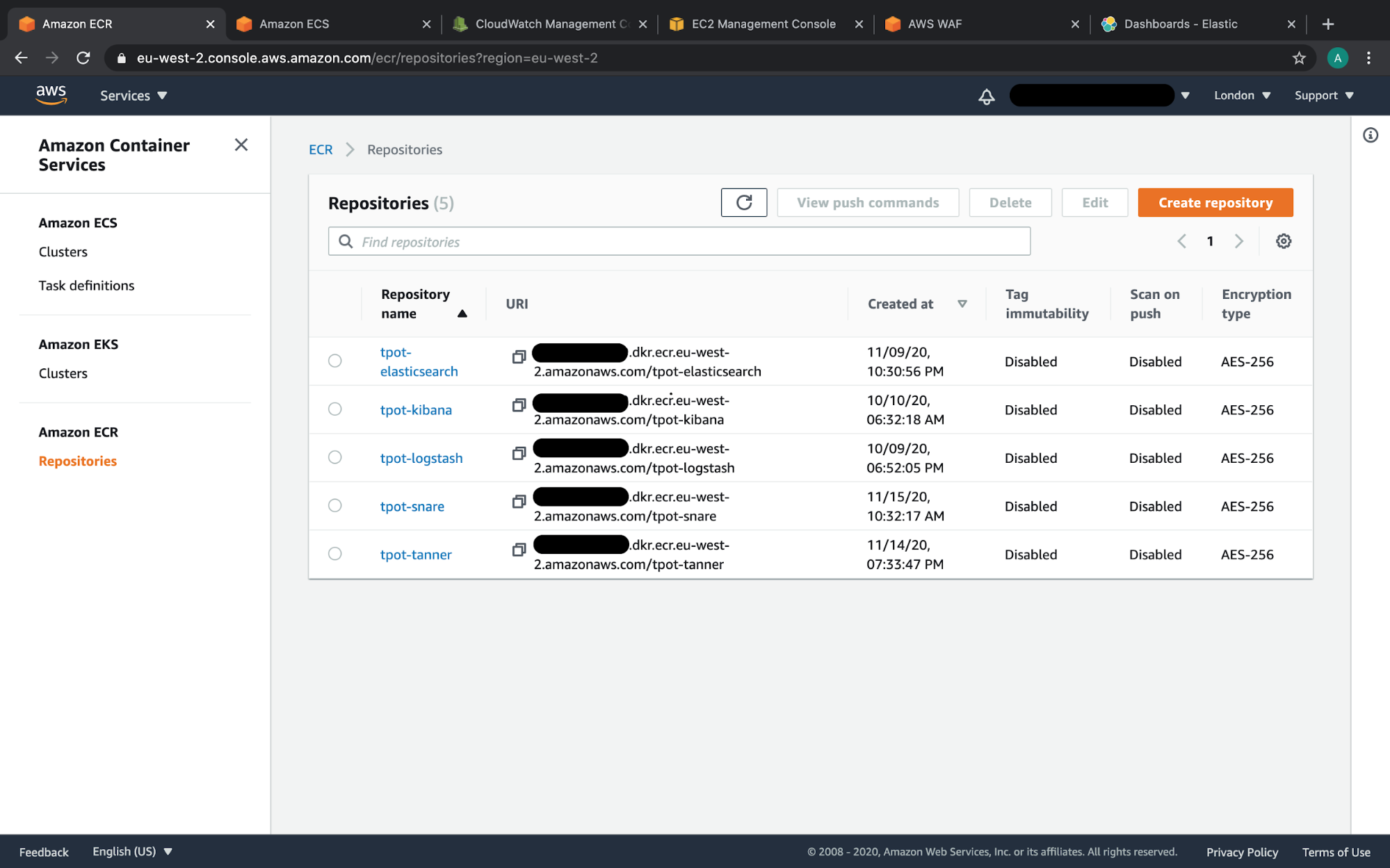

- Fargate awsvpc networking. Containers within the same Fargate task can communicate via localhost, but networking between ECS services requires AWS Cloud Map service discovery to provide hostnames. This breaks some of the assumptions and hard-wired settings in the T-Pot Elastic stack containers, and meant spending a few weekends cranking through Dockerfiles to make them Fargate-friendly and storing these bespoke images in Amazon Elastic Container Registry (ECR).

- Give me your public IPs! You can quickly bump into the Elastic IP address service limits when scaling out (see https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/elastic-ip-addresses-eip.html). It's possible to request a limit increase; I didn't do that as this was a prototyping effort, but may try it in future iterations.
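The config surgery described in the Fargate awsvpc networking point often boils down to swapping docker-compose style hostnames for Cloud Map DNS names, which take the form `<service>.<namespace>` in a private DNS namespace. This sketch assumes a hypothetical `tpot.local` namespace and Cloud Map service names that match the original hostnames; it illustrates the idea and is not the set of changes actually made to the T-Pot images.

```python
import re
from typing import Iterable

NAMESPACE = "tpot.local"  # hypothetical Cloud Map private DNS namespace

# Hypothetical hostnames hard-wired in the original container configs.
COMPOSE_HOSTS = ("elasticsearch", "kibana", "logstash")


def cloud_map_fqdn(service: str, namespace: str = NAMESPACE) -> str:
    """Cloud Map registers a service in a private DNS namespace as <service>.<namespace>."""
    return f"{service}.{namespace}"


def rewrite_hosts(config_text: str, hosts: Iterable[str] = COMPOSE_HOSTS,
                  namespace: str = NAMESPACE) -> str:
    """Replace bare hostnames that are followed by ':<port>' with Cloud Map FQDNs."""
    for host in hosts:
        # The lookbehind/lookahead skip dotted keys such as 'elasticsearch.hosts'
        # and names that were already rewritten, so the function is idempotent.
        pattern = rf"(?<![\w.-]){re.escape(host)}(?=:\d+)"
        config_text = re.sub(pattern, cloud_map_fqdn(host, namespace), config_text)
    return config_text


if __name__ == "__main__":
    line = 'elasticsearch.hosts: ["http://elasticsearch:9200"]'
    print(rewrite_hosts(line))
    # elasticsearch.hosts: ["http://elasticsearch.tpot.local:9200"]
```

Matching only `host:port` positions keeps the rewrite conservative: configuration keys and file names that happen to contain the same word are left alone.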

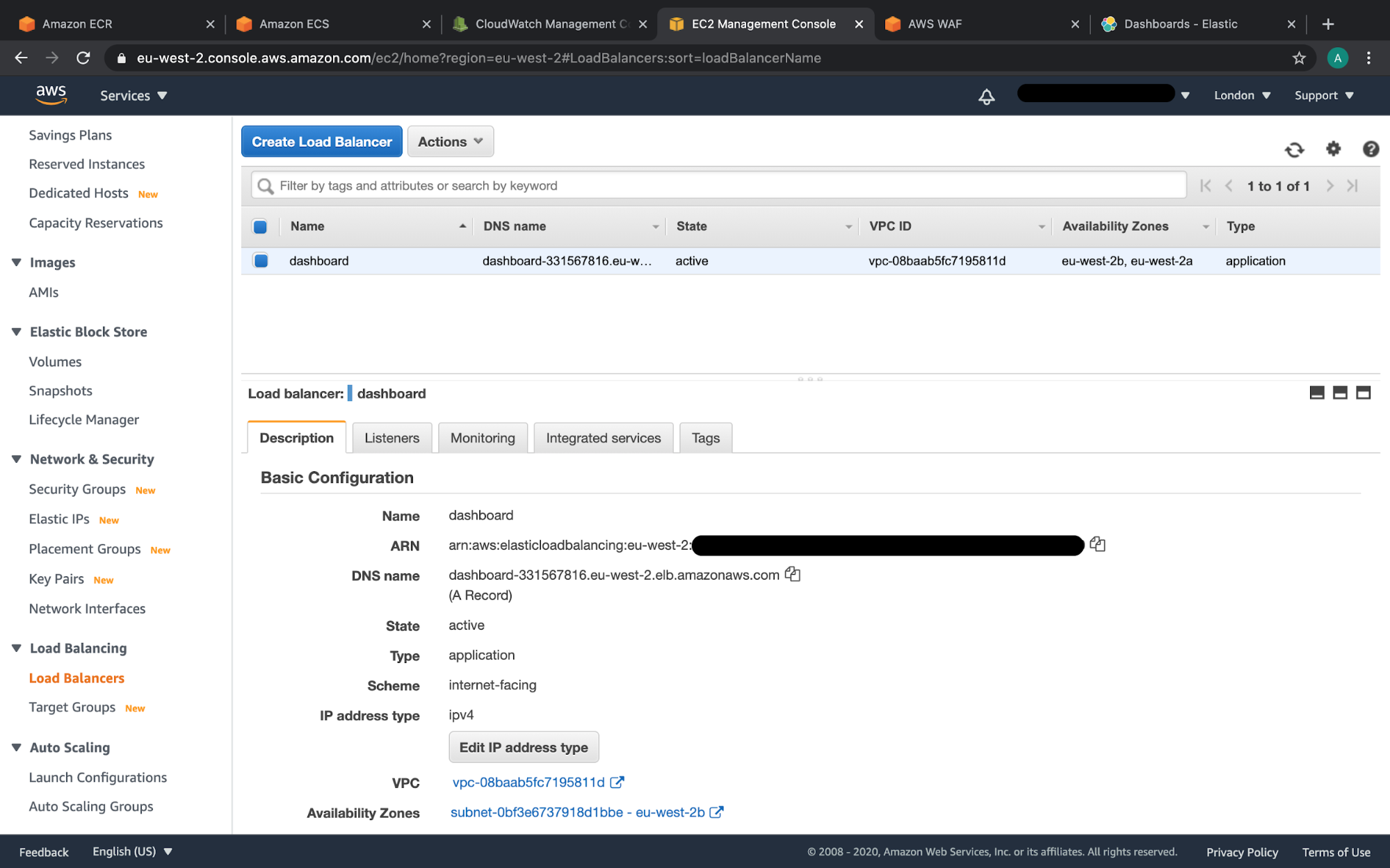

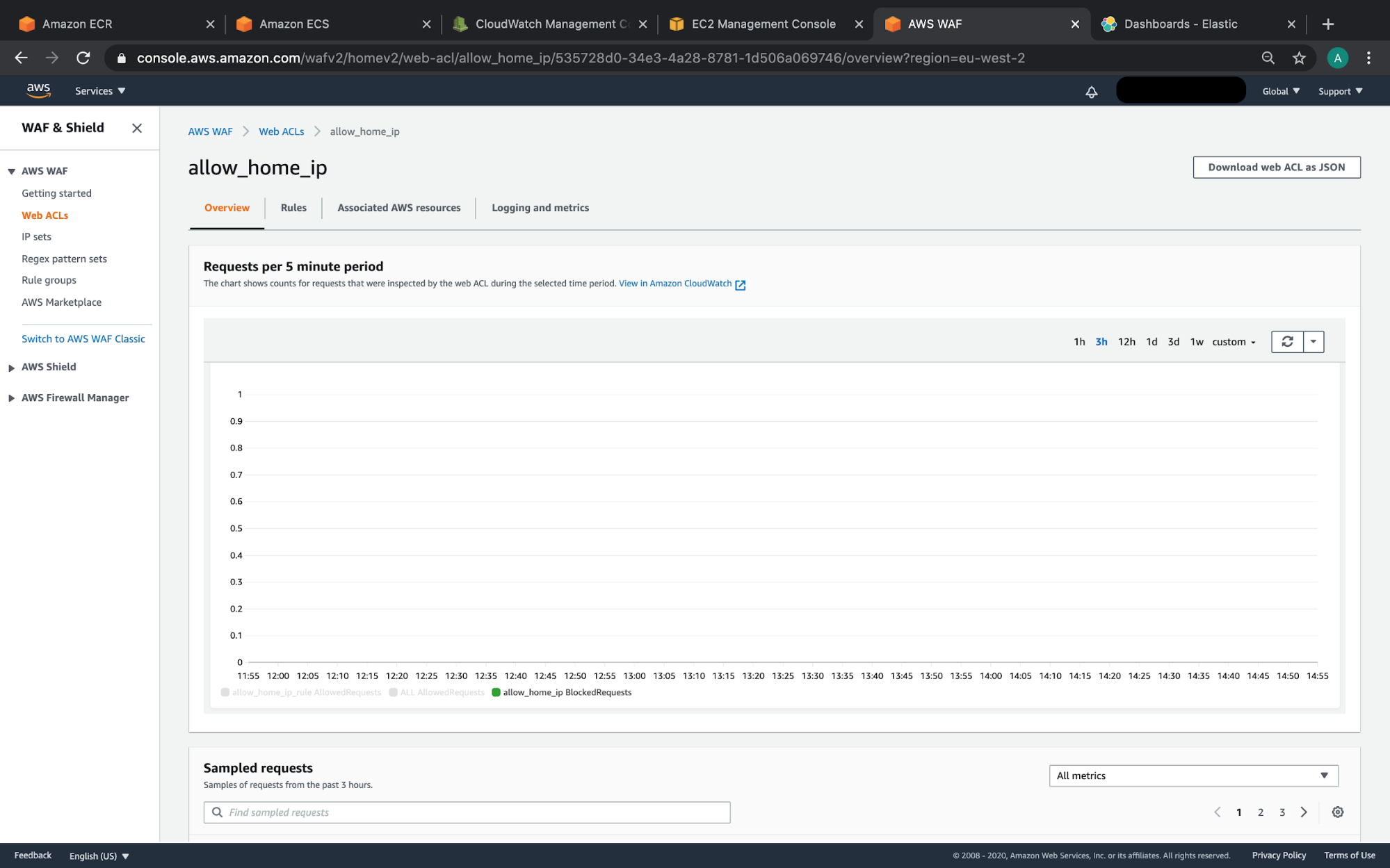
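A quick back-of-the-envelope check makes the public IP point concrete. This sketch assumes, as a simplification, one public IP per running honeypot task, and uses the documented default quota of five Elastic IP addresses per Region; the honeypot names and task counts are hypothetical.

```python
from typing import Dict

# Documented default Elastic IP quota per AWS Region (can be raised on request).
DEFAULT_EIP_QUOTA = 5


def eip_shortfall(tasks_per_honeypot: Dict[str, int],
                  quota: int = DEFAULT_EIP_QUOTA,
                  already_allocated: int = 0) -> int:
    """Return how many public IPs the plan needs beyond the quota (0 if it fits).

    Simplifying assumption: every running task consumes exactly one address.
    """
    needed = sum(tasks_per_honeypot.values())
    available = max(quota - already_allocated, 0)
    return max(needed - available, 0)


if __name__ == "__main__":
    # Hypothetical scale-out plan: running tasks per honeypot service.
    plan = {"cowrie": 2, "dionaea": 2, "adbhoney": 1, "heralding": 1}
    print(eip_shortfall(plan))  # prints 1 (6 needed, 5 available)
```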

- AWS load balancing. We were free to use the AWS Application Load Balancer (ALB) service, doing away with the need for an NGINX container for load balancing. WAF isn't yet a level 2 (L2) construct in CDK, which is a bummer, so I resorted to manually creating an IP allow list via the AWS console.

- Security. CDK follows the principle of least privilege, which is fantastic. To make this deployment function, it was necessary to manually configure a bunch of fine-grained, port-by-port controls over inbound and outbound traffic for each ECS service (for NFS and ECR traffic), plus IAM permissions for ECR access.

- Complexity. The end result is more complex than the self-sufficient, neatly integrated ISO, but it offers a readily scalable, dynamic deployment that does not rely on any virtual machines or servers that require patching and maintenance.
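An IP allow list in front of an ALB is typically a web ACL whose default action is Block, plus one Allow rule that matches an IP set; assuming it was configured that way here, the decision logic can be modelled with Python's standard `ipaddress` module. This is a sketch of the behaviour only, not the AWS WAF API, and the CIDR ranges are RFC 5737 documentation addresses standing in for real ones.

```python
import ipaddress
from typing import Iterable

# Hypothetical allow list (RFC 5737 documentation ranges stand in for real IPs).
ALLOWED_CIDRS = ("192.0.2.0/24", "198.51.100.17/32")


def web_acl_action(source_ip: str, allowed_cidrs: Iterable[str] = ALLOWED_CIDRS) -> str:
    """Model a web ACL: an ALLOW rule backed by an IP set, and a default BLOCK action."""
    addr = ipaddress.ip_address(source_ip)
    for cidr in allowed_cidrs:
        # Membership is False (not an error) when IPv4 and IPv6 are mixed.
        if addr in ipaddress.ip_network(cidr):
            return "ALLOW"
    return "BLOCK"  # default action for anything not on the list


if __name__ == "__main__":
    for ip in ("192.0.2.55", "198.51.100.17", "203.0.113.9"):
        print(ip, web_acl_action(ip))
```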
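The port-by-port security group rules and ECR permissions from the Security point can be summarised as data. In this sketch the security group names and honeypot ports are hypothetical, every rule is assumed to be TCP, and outbound HTTPS to anywhere is assumed for ECR pulls (VPC endpoints would narrow that). NFS on TCP 2049 for EFS and the ECR pull actions shown are standard.

```python
from dataclasses import dataclass
from typing import Dict, List

NFS_PORT = 2049   # EFS mount targets accept NFS on TCP 2049
HTTPS_PORT = 443  # ECR API calls and image layer downloads use HTTPS


@dataclass(frozen=True)
class Rule:
    direction: str  # "ingress" or "egress"
    port: int       # TCP port (all rules here are assumed to be TCP)
    peer: str       # hypothetical security group name or CIDR


def rules_for_service(service_sg: str, efs_sg: str,
                      honeypot_ports: List[int]) -> Dict[str, List[Rule]]:
    """Least-privilege rules for one honeypot service and the EFS security group."""
    rules: Dict[str, List[Rule]] = {service_sg: [], efs_sg: []}
    for port in honeypot_ports:
        # Only the honeypot's own ports are exposed to the internet.
        rules[service_sg].append(Rule("ingress", port, "0.0.0.0/0"))
    # NFS flows from the service to EFS only: egress on one side, ingress on the other.
    rules[service_sg].append(Rule("egress", NFS_PORT, efs_sg))
    rules[efs_sg].append(Rule("ingress", NFS_PORT, service_sg))
    # Outbound HTTPS so the task can pull its image from ECR.
    rules[service_sg].append(Rule("egress", HTTPS_PORT, "0.0.0.0/0"))
    return rules


def ecr_pull_policy(repository_arn: str) -> dict:
    """IAM policy allowing image pulls from one ECR repository."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            # GetAuthorizationToken cannot be scoped to a repository.
            {"Effect": "Allow", "Action": "ecr:GetAuthorizationToken", "Resource": "*"},
            {
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchGetImage",
                ],
                "Resource": repository_arn,
            },
        ],
    }


if __name__ == "__main__":
    for sg, sg_rules in rules_for_service("cowrie-sg", "efs-sg", [22, 23]).items():
        for rule in sg_rules:
            print(sg, rule)
```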

Get in touch!

Feel free to drop me a line (ant.b@camulos.io) if you like what you've read, have any questions or would like to build exciting new technologies!

Screenshots, Screenshots, Screenshots!

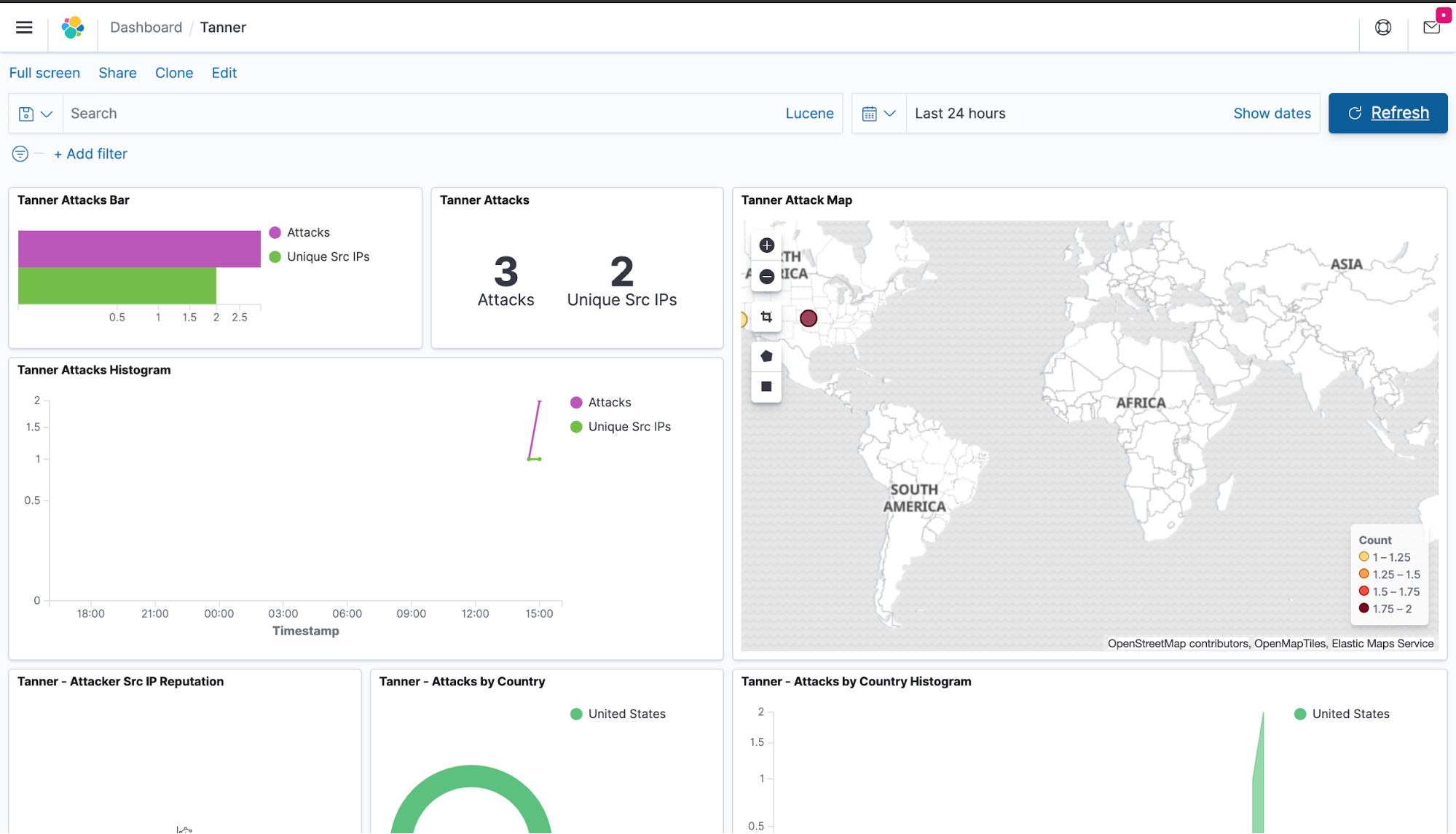

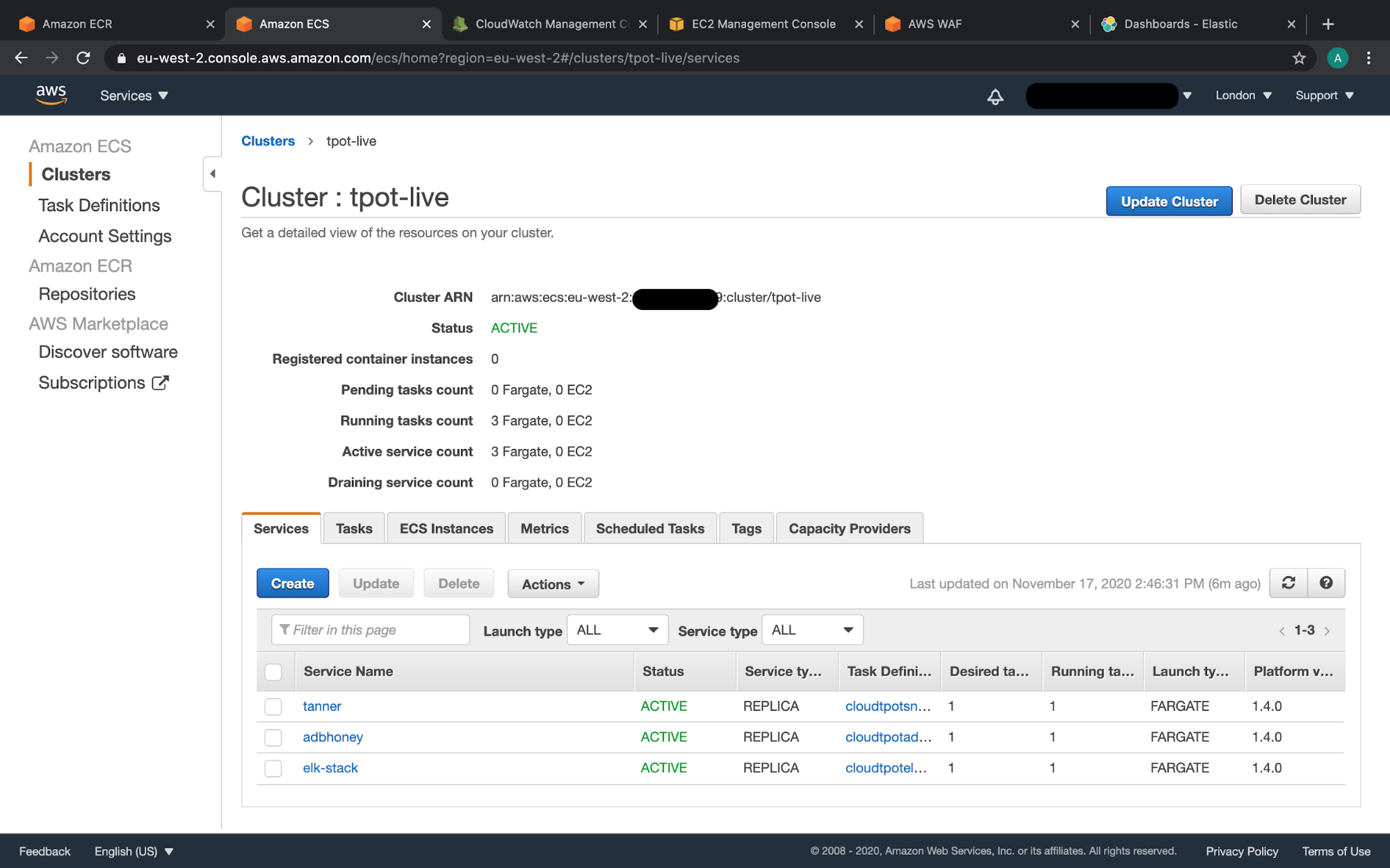

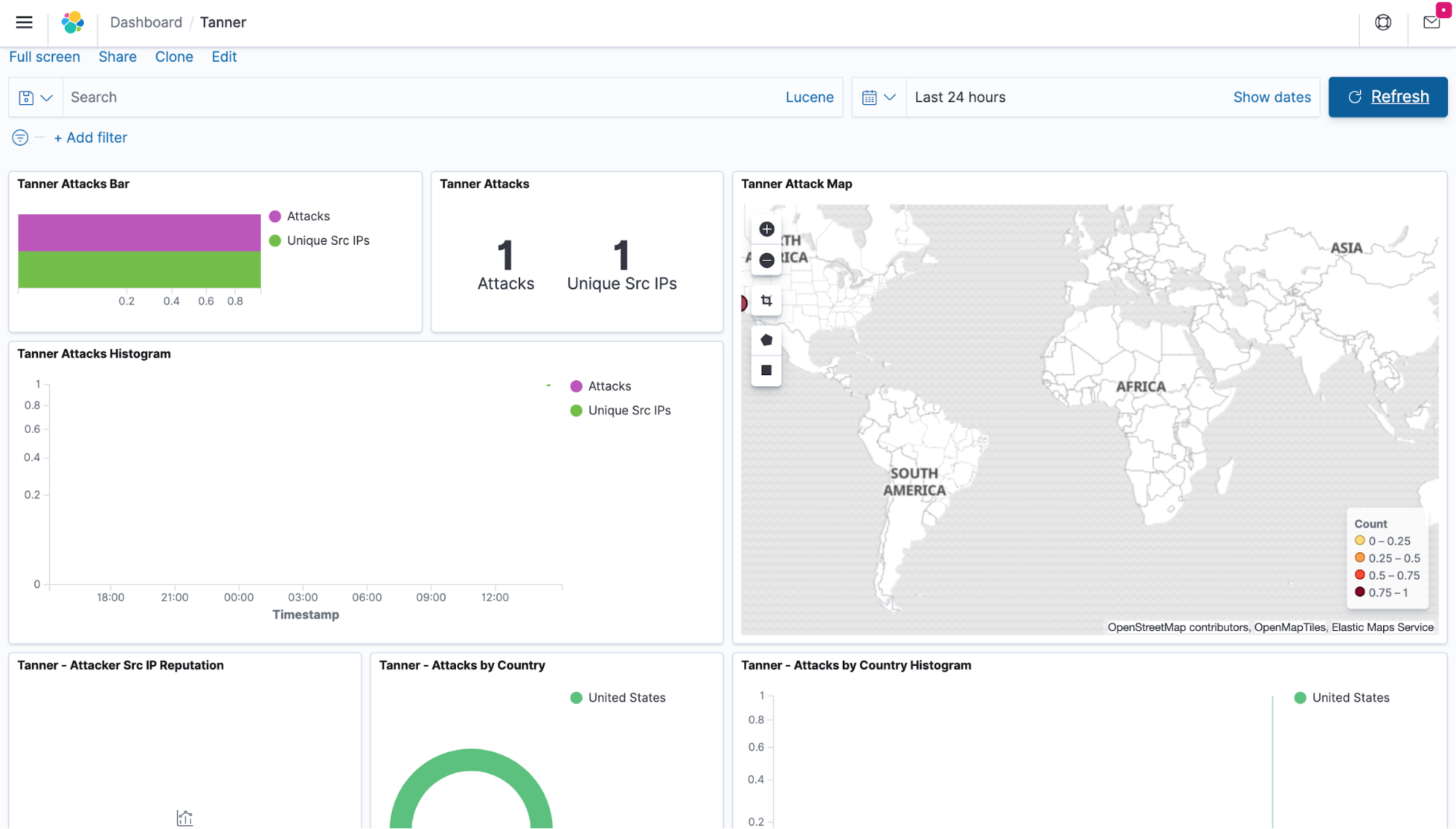

Pictures are always nice! While they might not be the most visually compelling images ever, they do show the results of the Docker image build and push, plus the CDK deployment.

Screenshot of the T-Pot Kibana dashboard showing attacks logged in real time

AWS console showing the ECS cluster configured for T-Pot containers

AWS console showing Application Load Balancer routing to T-Pot Kibana dashboard ECS service

AWS console showing AWS WAF (Web Application Firewall) with an IP allow list

AWS console showing ECS services running T-Pot modified containers, images stored in ECR

AWS console showing adbhoney honeypot running as ECS services, using modified image stored in ECR

AWS console showing ECR repositories for containers that required modified Docker builds or configuration due to Fargate networking

Screenshot of a fresh T-Pot cdk deploy... happy times for all of about 3 seconds!